Ch2: Preliminaries

Colab: ch2-1 (The output of all programs will be displayed on colab.)

Lecture notes: Heptabase home page

Reference book: Dive into Deep Learning

Lecture date: 2024/3/5, w3

# Data Manipulation

# Tensors: Arrays of Data

- What are tensors?

- Tensors are multidimensional arrays (of any number of dimensions) used for storing and manipulating data in deep learning.

- They are the basic data structure in all deep learning frameworks (such as PyTorch, TensorFlow, and MXNet).

- Creating Tensors in PyTorch

import torch

x = torch.arange(12)  # or torch.arange(12, dtype=torch.float32)

print(x.numel())

print(x.shape)

- torch.arange: Creates a 1D tensor [0..11].

- tensor.numel: Returns number of elements in the tensor.

- tensor.shape: Provides the dimensions or shape of the tensor.

- Special Tensor Initialization

zero = torch.zeros((2, 3, 4)) # or [2,3,4]

print(zero)

one = torch.ones((2, 3, 4))

print(one)

rnd = torch.randn(3, 4)

print(rnd)

t = torch.tensor([[2, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

print(t)  # without the nested brackets, e.g. torch.tensor((1, 2, 3)), the result is a 1-D tensor with shape [3]

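- torch.zeros: creates a tensor filled with 0s.

- torch.ones: creates a tensor filled with 1s.

- torch.randn: creates a tensor whose elements are sampled from the standard Gaussian distribution (the standard normal distribution, with mean 0 and standard deviation 1). The n stands for normal; there is also torch.rand(), which samples uniformly from [0, 1).

- torch.tensor: a tensor can also be created from a Python list.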

- Reshaping Tensors

X = t.reshape(2, 6)

print(X)

Y = t.reshape(-1, 3)

print(Y)

Z = t.reshape(4, -1)

print(Z)

- In reshape, -1 tells PyTorch to automatically compute the appropriate size for that dimension.

- Note: the new shape must be compatible with the number of elements, otherwise an error is raised (e.g., 12 elements → 2*4 → ERROR).
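The -1 inference and the compatibility check can be tried out with a small sketch. The variable names below are illustrative and not from the lecture; the error type shown is what PyTorch raises for an invalid target shape.

```python
import torch

t = torch.arange(12)  # 12 elements in total

# -1 asks PyTorch to infer that dimension: 12 / 3 = 4 rows, 12 / 4 = 3 columns.
inferred_rows = t.reshape(-1, 3)
inferred_cols = t.reshape(4, -1)
print(inferred_rows.shape, inferred_cols.shape)

# An incompatible target shape (2 * 4 = 8 != 12) raises a RuntimeError.
try:
    t.reshape(2, 4)
    reshape_failed = False
except RuntimeError as err:
    reshape_failed = True
    print("reshape failed:", err)
```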

# Indexing and Slicing

- Indexing Tensors

- Tensors can be accessed using indices (索引), similar to Python lists.

- Indices start at 0, and -n refers to the n-th element from the end.

X = torch.arange(12)

print(X[0], X[1])

print(X[-3])

- Slicing Tensors

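- Used to access sub-sections of a tensor.

- Use start:stop:step as the syntax for indexing a subset of elements. The last selected index start + k∗step satisfies start + k∗step ≤ stop−1 < start + (k+1)∗step. By default, start is 0, stop is n (the length), and step is 1; because the upper bound is excluded, the default slice covers n elements.

- Forward slicing examples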

X1 = X[1:3]

print(X1)

X2 = X[:3]

print(X2)

X3 = X[2:8:2]

print(X3)

X4 = X[:]

print(X4)
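As a rough check of the start:stop:step rule, the sketch below uses a plain Python list, which follows the same rule as tensors for positive steps. The helper name slice_indices is hypothetical and only serves this illustration.

```python
def slice_indices(start, stop, step):
    """Indices visited by start:stop:step for a positive step."""
    return list(range(start, stop, step))

data = list(range(12))

idx = slice_indices(2, 8, 2)
print(idx)           # [2, 4, 6]
print(data[2:8:2])   # [2, 4, 6]

# The last visited index start + k*step satisfies
# start + k*step <= stop - 1 < start + (k + 1)*step.
start, stop, step = 2, 8, 2
k = len(idx) - 1
last = start + k * step
print(last <= stop - 1 < last + step)  # True

# Defaults: start=0, stop=len(data), step=1, so [:] covers every element.
print(len(data[:]))  # 12
```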

- Reverse (negative index) operations

print(X[-1])

print(X[:-1])

# print(y[::-1])  # reversing with a negative step works for Python lists only, not for tensors

- When slicing, if only one index or range is specified, it defaults to operating along the first axis (axis 0).

Y = X.reshape(4,3)

print(Y)

print(Y[0])

- Multiple elements can be assigned the same value at once.

Y[:2, :] = 12

print(Y)
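To make the axis behaviour concrete, here is a small sketch of indexing along each axis. Since tensors reject negative steps, torch.flip is used here as one possible way to reverse an axis; that choice is an addition for illustration, not part of the lecture.

```python
import torch

Y = torch.arange(12).reshape(4, 3)

first_row = Y[0]      # a single index selects along axis 0 (a row)
first_col = Y[:, 0]   # ':' keeps every row, 0 picks the first column
element = Y[1, 2]     # row 1, column 2

# Y[::-1] is not allowed for tensors; torch.flip reverses the given axis instead.
rows_reversed = torch.flip(Y, dims=[0])

print(first_row, first_col, element, rows_reversed, sep='\n')
```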

# Operations

- Elementwise Operations

x = torch.tensor([1.0, 2, 4, 8])

y = torch.tensor([2, 2, 2, 2])

print(x + y, x - y, x * y, x / y, x ** y, sep='\n')

torch.exp(x)

- Concatenation of Tensors

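torch.cat((X, Y), dim=<0 or 1>): dim=0 (axis 0) stacks the tensors vertically, so the rows of Y are appended below the rows of X; dim=1 joins them horizontally, so the columns of Y are appended to the right of the columns of X.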

X = torch.arange(12, dtype=torch.float32).reshape((3,4))

Y = torch.tensor([[2.0, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

torch.cat((X, Y), dim=0), torch.cat((X, Y), dim=1)
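Checking the resulting shapes is a quick way to see which axis grows. The sketch below reuses the X and Y defined above; the names rows_joined and cols_joined are illustrative.

```python
import torch

X = torch.arange(12, dtype=torch.float32).reshape((3, 4))
Y = torch.tensor([[2.0, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

rows_joined = torch.cat((X, Y), dim=0)  # Y's rows go below X's rows
cols_joined = torch.cat((X, Y), dim=1)  # Y's columns go to the right of X's

print(rows_joined.shape)  # torch.Size([6, 4])
print(cols_joined.shape)  # torch.Size([3, 8])
```

All dimensions other than the one being concatenated must already match, which is why two (3, 4) tensors can be joined along either axis.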

- Logical Operations

- Tensors can be compared elementwise with X == Y (or with < and >).

X = torch.arange(12, dtype=torch.float32).reshape((3,4))

Y = torch.tensor([[2.0, 1, 4, 3], [1, 2, 3, 4], [4, 3, 2, 1]])

X == Y

- Aggregation Functions

- Include operations like max(), min(), sum(), mean(), which allow you to aggregate the values of a tensor along a specified dimension.

print(X)

print('-'*10)

print(X.sum(), X.mean(), sep='\n')

print('-'*10)

print(X.sum(dim=0), X.sum(dim=1), sep='\n')

print('-'*10)

print(X.mean(dim=0), X.mean(dim=1), sep='\n')

print('-'*10)

print(X.min(dim=0), X.min(dim=1), sep='\n')

print('-'*10)

print(X.max(dim=0), X.max(dim=1), sep='\n')
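The effect of the dim argument is easiest to see on a small tensor. The following sketch assumes the same 3×4 X as above; keepdim=True is shown as an optional extra that keeps the reduced axis with size 1.

```python
import torch

X = torch.arange(12, dtype=torch.float32).reshape((3, 4))

col_sums = X.sum(dim=0)                   # collapses axis 0: one value per column
row_sums = X.sum(dim=1)                   # collapses axis 1: one value per row
row_sums_2d = X.sum(dim=1, keepdim=True)  # same values, shape (3, 1)

# With dim given, max() and min() return a (values, indices) pair.
row_max = X.max(dim=1)
print(col_sums, row_sums, row_sums_2d.shape, sep='\n')
print(row_max.values, row_max.indices, sep='\n')
```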

# Broadcasting

- Broadcasting automatically expands tensor dimensions during an operation (elementwise operations require matching shapes).

- Two-Step Process

- Expand dimensions.

- Perform elementwise operation.

a = torch.arange(3).reshape((3, 1))

b = torch.arange(2).reshape((1, 2))

c = a + b

print(c)

- Rules of Broadcasting (PyTorch applies them automatically)

- Tensor Dimensions Alignment: Compare size of each dimension from last to first.

- Compatibility of Dimensions: Dimensions are compatible if they are equal or one is 1.

- Expansion of Fewer Dimensions: Add dimensions of size 1 at the beginning if necessary.

- Size 1 Dimensions Stretching: Adjust dimensions of size 1 to match the other tensor. (Any dimension of size 1 can be stretched.)

- No Stretching for Non-1 Dimensions: If the sizes differ and neither is 1, an error is raised.

- Example of broadcasting steps

A (8, 1, 6, 1) and B (7, 1, 5)

- Align shapes: (8, 1, 6, 1) and (1, 7, 1, 5).

- Stretch dimensions of size 1.

- Final shapes: both become (8, 7, 6, 5).

- Benefits

- Memory Efficiency: reduces memory usage by avoiding explicit copies of the data.

- Code Simplification: removes the need to match tensor shapes by hand.
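The broadcasting rules listed above can be written down as a short pure-Python function that only works on shapes. This is a sketch for understanding, not PyTorch's actual implementation, and the function name broadcast_shape is hypothetical.

```python
def broadcast_shape(shape_a, shape_b):
    """Return the broadcast result shape, or raise ValueError if incompatible."""
    # Pad the shorter shape with leading 1s so both have the same length.
    ndim = max(len(shape_a), len(shape_b))
    a = (1,) * (ndim - len(shape_a)) + tuple(shape_a)
    b = (1,) * (ndim - len(shape_b)) + tuple(shape_b)

    result = []
    for size_a, size_b in zip(a, b):
        if size_a == size_b or size_a == 1 or size_b == 1:
            # A size-1 dimension is stretched to match the other one.
            result.append(size_b if size_a == 1 else size_a)
        else:
            raise ValueError(f"cannot broadcast sizes {size_a} and {size_b}")
    return tuple(result)


print(broadcast_shape((8, 1, 6, 1), (7, 1, 5)))  # (8, 7, 6, 5)
print(broadcast_shape((3, 1), (1, 2)))           # (3, 2)
```

Calling broadcast_shape((2, 3), (4, 3)) raises ValueError, matching the rule that two different sizes can only be combined when one of them is 1.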

# Memory-Efficient Operations in Python

- Inefficient Memory Allocation

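- Memory Allocation for Operations:

- Y = Y + X allocates new memory for the result of Y + X. (In Python, assignment attaches a name, like a label, to an object in memory.)

- The old memory is not a concern because Python has garbage collection, but finding new memory and reclaiming the old memory both cost time.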

X = torch.rand(2,3)

Y = torch.rand(2,3)

print(id(X), id(Y))

Y = Y + X

print(id(Y))

- Inefficiency Reasons:

- Unnecessary Memory Allocation: new memory is allocated for operations again and again.

- Multiple References Issue: potential memory leaks or references to stale memory.

- In-Place Operations in PyTorch

- Use slice notation Y[:] = ... to update in place.

Z = torch.zeros_like(Y)  # a zero tensor with the same shape as Y

print('id(Z):', id(Z))

Z[:] = X + Y # In-place update

print('id(Z):', id(Z))

- Reducing memory usage

- Use operations like X[:] = X + Y or X += Y when the old value of X is not needed later.

X = torch.rand(2,3)

Y = torch.rand(2,3)

before = id(X)

X += Y

id(X) == before
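The multiple-references issue can be illustrated with a second name pointing at the same tensor. This is a minimal sketch; the name alias is introduced only for this example.

```python
import torch

X = torch.ones(2, 3)
Y = torch.ones(2, 3)

alias = X  # a second name for the same tensor object

X += Y     # in place: the existing memory is overwritten
in_place_same_object = alias is X    # alias sees the updated values

X = X + Y  # rebinding: X now names a newly allocated tensor
rebinding_same_object = alias is X   # alias still refers to the old tensor

print(in_place_same_object, rebinding_same_object)
print(alias)  # all 2s (the stale values)
print(X)      # all 3s
```

After the rebinding step, any code still holding alias keeps working with the old values, which is the stale-reference problem that in-place updates avoid.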

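# Type Conversion (between the older NumPy and the newer PyTorch)

- Tensor-NumPy Conversion

- PyTorch tensors and NumPy arrays share the underlying memory.

- When printed, a Python list shows commas between elements, whereas a NumPy array does not.

- Tensor to NumPy: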

A = X.numpy()

print(A)

- NumPy to Tensor:

B = torch.from_numpy(A)

print(B)

- Types Confirmation:

type(A), type(B)
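Because the memory is shared, a change made through one object shows up in the others. The sketch below assumes a CPU tensor and uses torch.tensor(A) as a contrast, since that call copies the data rather than sharing it.

```python
import torch

X = torch.zeros(3)
A = X.numpy()            # NumPy view of the same CPU memory
B = torch.from_numpy(A)  # tensor backed by the same buffer again

X[0] = 7.0               # modify through the original tensor
print(A, B)              # the change is visible through A and B as well

C = torch.tensor(A)      # torch.tensor copies the data instead of sharing it
X[1] = 5.0
print(A, C)              # A sees the new value, C does not
```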

- Size-1 Tensor to Scalar Conversion

- .item(): Direct conversion to Python scalar.

a = torch.tensor([3.5])

a, a.item(), float(a), int(a)

# Data Preprocessing

# Reading the Dataset with Pandas

- Creating and Writing to a CSV File

import os

# The 'exist_ok=True' parameter allows the function to continue without raising an error if the directory already exists.
os.makedirs(os.path.join('.', 'data'), exist_ok=True)  # join('.', 'data') -> './data'

# Define the path for the data file to be created. This uses 'os.path.join' for compatibility across different OS.
data_file = os.path.join('.', 'data', 'house_tiny.csv')

# Write mode will create the file if it does not exist or overwrite it if it does.
with open(data_file, 'w') as f:
    # 'NA' is used to represent missing values.
    f.write('''NumRooms,RoofType,Price
NA,NA,127500
2,NA,106000
4,Slate,178100
NA,NA,140000''')

- Loading Data with Pandas

- Importing pandas: import pandas as pd

- pd.read_csv reads the CSV file into a pandas DataFrame.

import pandas as pd

# 'pd.read_csv()' is a function in pandas used to read a CSV file and convert it into a DataFrame.
# A DataFrame is a 2-dimensional labeled data structure with columns of potentially different types.
data = pd.read_csv(data_file)

# This will display the contents of the CSV file as a table with indexed rows and named columns.
print(data)

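# Data Preparation

- Input-target separation: split the features from the labels (for supervised learning).

- Handling Missing Values

- Missing values are represented as NaN.

- Neural networks can only process numbers (not strings), so NaN must be encoded.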

- One-Hot Encoding with pd.get_dummies():

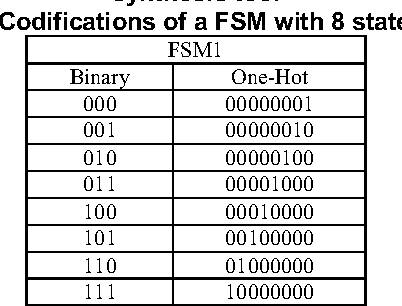

![How one-hot encoding differs from ordinary encoding]()

- Converts categorical variables to numerical format.

- dummy_na=True includes a column for NaN values.

# Splitting the DataFrame 'data' into inputs and targets for machine learning or data analysis purposes.
# 'data.iloc[:, 0:2]' selects all rows (:) and the first two columns (0:2) from the DataFrame. These are the input features.
# 'data.iloc[:, 2]' selects all rows (:) and the third column (2) which is assumed to be the target variable.
inputs, targets = data.iloc[:, 0:2], data.iloc[:, 2]

print(inputs)

# Convert categorical data into dummy/indicator variables.
# 'pd.get_dummies()' is a function that converts categorical variable(s) into dummy/indicator variables.
# 'dummy_na=True' includes an additional column for missing values (NA) which are present in the dataset.
# Note: only the 'RoofType' column is passed in here, so the result does not contain 'NumRooms'.
inputs = pd.get_dummies(inputs['RoofType'], dummy_na=True, prefix='Label')

# This DataFrame now contains binary columns for each category in the original data,
# including an additional column for missing values (NA).
print(inputs)

import pandas as pd

# Create a sample DataFrame
data = {'Category': ['A', 'B', 'A', 'C', 'B'], 'Name':['John','Mary','Joe','Tom','Harry']}
df = pd.DataFrame(data)
print(df)

# Use pd.get_dummies to convert the 'Category' column into dummy variables
dummy_df = pd.get_dummies(df, prefix='Category')

# # Concatenate the dummy variables with the original DataFrame
# df = pd.concat([df, dummy_df], axis=1)
print(dummy_df)
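To contrast one-hot encoding with a plain integer (label) encoding, the sketch below encodes the same column both ways. The roof values other than 'Slate' are made up for illustration, and pd.factorize is used here as one way to obtain integer codes; it is not part of the lecture code.

```python
import pandas as pd

# Hypothetical sample values for illustration.
roof = pd.Series(['Slate', 'Tile', 'Slate', 'Wood'], name='RoofType')

# Integer (label) encoding: one column, categories mapped to 0, 1, 2, ...
codes, categories = pd.factorize(roof)
print(list(codes), list(categories))

# One-hot encoding: one indicator column per category, exactly one 1 per row.
one_hot = pd.get_dummies(roof, prefix='RoofType', dtype=int)
print(one_hot)
```

Integer codes imply an ordering between categories (Wood > Tile > Slate) that does not exist in the data, whereas one-hot columns treat every category symmetrically.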

- Converting NaN Data

- Strategy: Replace missing values with mean or median.

# Filling missing values in the DataFrame 'inputs' with the mean of each column.
# 'inputs.fillna()' is a function that fills NA/NaN values using the specified method.
# 'inputs.mean()' calculates the mean of each column in the DataFrame, ignoring NaN values.
# This method is often used to handle missing data in machine learning and data analysis.
inputs = inputs.fillna(inputs.mean())

# This DataFrame now has missing values replaced by the mean of their respective columns.
print(inputs)
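Mean imputation only has a visible effect when a numeric column with NaN values is still present. The following self-contained sketch is a variant of the steps above, not the lecture's exact code: it assumes the whole inputs DataFrame is passed to pd.get_dummies so that NumRooms is kept, and it reads the same CSV text from memory.

```python
import io
import pandas as pd

csv_text = """NumRooms,RoofType,Price
NA,NA,127500
2,NA,106000
4,Slate,178100
NA,NA,140000"""
data = pd.read_csv(io.StringIO(csv_text))  # 'NA' is parsed as NaN

inputs, targets = data.iloc[:, 0:2], data.iloc[:, 2]

# Encode the non-numeric column; NumRooms is numeric and is kept as-is.
inputs = pd.get_dummies(inputs, dummy_na=True, dtype=float)

# Replace the remaining NaNs (in NumRooms) with the column mean: (2 + 4) / 2 = 3.
inputs = inputs.fillna(inputs.mean())
print(inputs)
```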

- Conversion to Tensor Format

- Framework compatibility: For use in deep learning frameworks like PyTorch.

- Conversion process: Convert DataFrame to NumPy array then to PyTorch tensor.

import torch

# Convert the pandas DataFrame 'inputs' to a numpy array and then to a PyTorch tensor.
# 'inputs.to_numpy(dtype=float)' converts the DataFrame to a numpy array of type float.
# 'torch.tensor()' converts the numpy array into a PyTorch tensor, which is used for computations in PyTorch.
X = torch.tensor(inputs.to_numpy(dtype=float))
y = torch.tensor(targets.to_numpy(dtype=float))

# 'X' is the tensor containing input features, and 'y' is the tensor containing target values.
print(X, y)

# Bonus 1 Exercise

- Convert each sample (row) into numeric data by performing one-hot encoding on non-numeric columns (Name and Gender).

- Produce a tensor to store the BMIs for the 20 persons.

- Compute the average BMI for the 20 persons.

- Find the student who has the highest BMI.

Solution: Colab